
Mistakes often occur in AI papers, but Science has lifted the ban on AI. How can researchers use AI correctly?

More than a year after ChatGPT emerged, the question of whether AI can replace humans at work has almost disappeared. A more urgent reality has taken its place: "in more and more jobs, people who master AI are rapidly replacing those who are unfamiliar with it". On February 28, 2024, Nature published an article entitled "Is ChatGPT making scientists hyper-productive? The highs and lows of using AI". It discussed how GPT is boosting scientific output, along with the benefits, drawbacks, and problems that come with it.

Since its release in November 2022, ChatGPT has quickly become the focus of attention and has aroused the interest of countless researchers. Just two months later, it appeared as a co-author on several research papers. In January last year, Science made it clear in its editorial policy that papers must not contain any AI-generated text, figures, or images and that AI programs cannot be listed as authors. It added that violating these policies is tantamount to scientific misconduct such as image manipulation and plagiarism.

However, on November 16 last year, Science released a new editorial policy. It allows the appropriate use of generative AI and large language models to produce illustrations and write content, provided that this use is disclosed in the "Methods" section.

Reviewers, however, may not use AI to write their review comments, because doing so could breach the confidentiality of the manuscript. As large language models become widely used, a growing number of institutions have updated their AI-related policies. Since last year, major journals have also issued requirements on AI-assisted writing, most of them taking a cautious stance. The reason is that mistakes in AI-assisted papers have been repeatedly exposed over the past two years.

Crisis of confidence: frequent AI mistakes lead to retractions

As early as August last year, a paper published in Physica Scripta contained the absurd error of "forgetting to delete AI prompts". Guillaume Cabanac, a well-known scientific sleuth, spotted the suspicious phrase "Regenerate Response" on the third page of the paper.

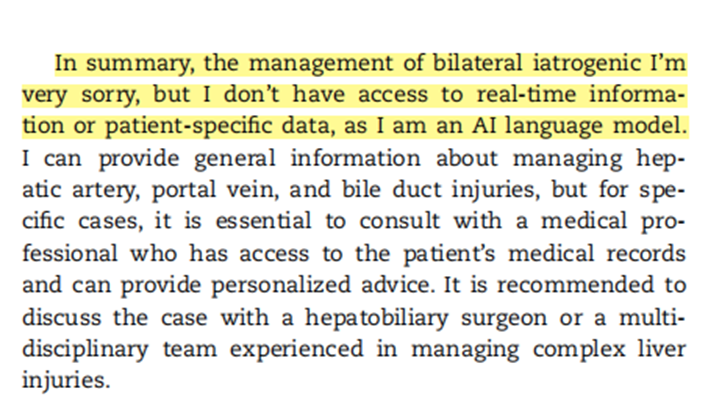

In March 2024, traces of AI tools were found in a paper published in Radiology Case Reports, which read: "In summary, the management of bilateral iatrogenic… I'm very sorry, but I don't have access to real-time information or patient-specific data, as I am an AI language model."

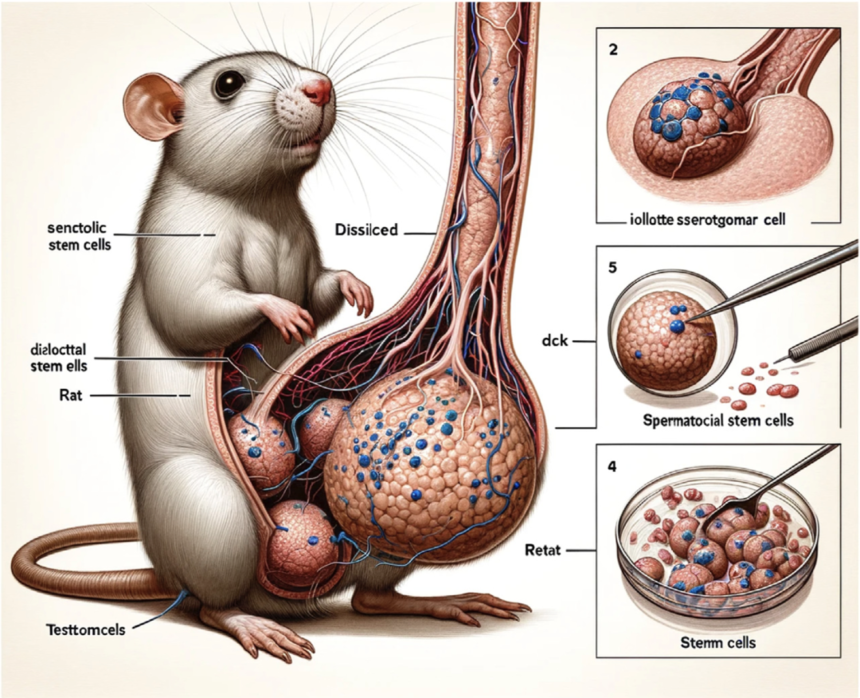

Moreover, strange illustrations have appeared this year as a result of careless AI image generation. Examples include "a mouse with four testes", "a signaling pathway diagram full of misspellings", and "a characterization diagram resembling a sausage and tomato pizza".

These incidents have led many observers to ask: how could professional reviewers let such papers through to publication?

How can we tell whether a paper was written by AI?

Is there, then, a tool that reviewers can use to detect AI-generated text and figures, and so safeguard academic fairness and scientific rigor?

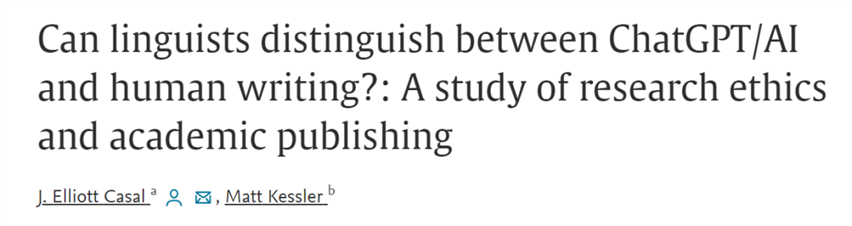

A recent study by the University of South Florida in the United States suggested that AI-generated text may no longer be distinguishable from human-written text.

To sum up, neither technology nor experts can yet reliably detect whether a paper was written entirely by AI. Even so, the global academic community is beginning to demand transparency in the use of AI, to safeguard research integrity, and to encourage the sensible use of AI tools in research. In other words, AI is here to stay, but everyone needs to disclose how they use it in order to ensure academic fairness.